Multicore processors with simultaneous multithreading (SMT, or Intel's Hyper-Threading) add a new challenge for an operating system’s process scheduler. Two threads scheduled on different cores generally run faster than two threads scheduled onto different thread contexts of the same core, because many of a core's hardware resources are shared between its SMT thread contexts. This becomes a problem when there are more than one but fewer than N-1 runnable threads, where N is the number of thread contexts: a scheduler can mistakenly place two threads on the same core while leaving another core idle.

The default process scheduler in Linux (as of kernel 2.6.34) is CFS (the Completely Fair Scheduler). The optional “tmb” kernels in Mandriva distributions use BFS (Brain Fuck Scheduler) by default instead. BFS claims to be SMT-aware, much simpler, and lower-latency than CFS, and is targeted at “interactive” desktop-style use on small systems.

Here, I measure how “SMT-aware” the BFS and CFS schedulers are when running a simple CPU-intensive workload.

Workload

Dhrystone 2.1, compiled for x86-64 with gcc. This is a simple integer benchmark with a small memory footprint and no I/O. I run multiple independent instances of Dhrystone at the same time. Interestingly, Dhrystone’s throughput actually falls with Hyper-Threading: two instances sharing one core achieve roughly 4% less combined throughput than a single instance running alone.
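A minimal harness for running several independent instances at once might look like the Python sketch below. It is not the script used for these measurements: the command is a placeholder, and the sketch assumes the benchmark runs non-interactively. It starts n copies of a command, waits for all of them, and reports the wall-clock time; with a fixed amount of work per instance, aggregate throughput is proportional to n divided by that time.

```python
import subprocess
import time


def run_instances(cmd, n):
    """Launch n independent copies of cmd concurrently and wait for all of them.

    Returns (wall-clock seconds, list of exit codes).
    """
    start = time.perf_counter()
    # Start every instance before waiting on any, so they all run at the same time.
    # stdin is closed so the copies do not compete for terminal input.
    procs = [
        subprocess.Popen(cmd, stdin=subprocess.DEVNULL, stdout=subprocess.DEVNULL)
        for _ in range(n)
    ]
    codes = [p.wait() for p in procs]
    return time.perf_counter() - start, codes
```

For example, `run_instances(["./dhry2"], 4)` would run four concurrent copies of a hypothetical `./dhry2` binary.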

System

- Core i7 860: 4 cores, 2-way SMT (8 thread contexts)

- Mandriva Linux, kernel-tmb-server-2.6.34.7-3mdv, with the BFS and CFS process schedulers.

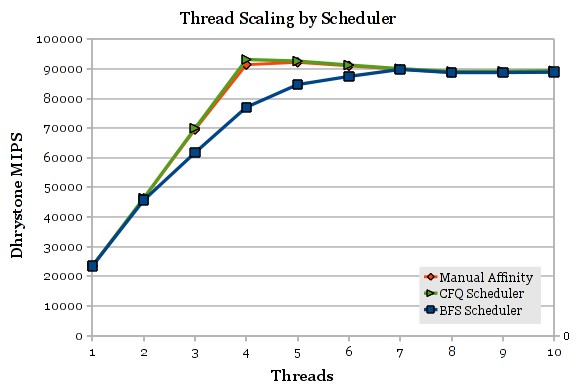

CFS and BFS

When scheduling threads onto cores and thread contexts, there are three trivial cases (for N thread contexts): one runnable thread (placing it on any thread context is equivalent), N-1 runnable threads (leave exactly one thread context idle; which one doesn’t matter), and N or more threads (fill every thread context and rotate threads among them fairly). For anything in between (2 to N-2 threads), the ideal schedule first gives each thread its own core, and only doubles up (uses both thread contexts of a core) when there are more threads than cores. The “Manual Affinity” results below assign Dhrystone tasks to processors by hand using this rule.
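The placement rule can be sketched in Python as below. This illustrates the rule and is not the author's actual affinity setup; all function names are hypothetical. `placement_order` takes a mapping from logical CPU number to physical core and lists CPUs so that every core receives one thread before any core receives a second. `linux_core_map` reads that mapping from Linux sysfs (`topology/physical_package_id` and `topology/core_id`), and `pin_instance` uses `os.sched_setaffinity` to pin a process; both helpers are Linux-only.

```python
import os
from collections import defaultdict


def placement_order(core_of):
    """Order logical CPUs so each physical core is used once before any is doubled up.

    core_of maps logical CPU number -> physical core identifier (any hashable).
    """
    by_core = defaultdict(list)
    for cpu in sorted(core_of):
        by_core[core_of[cpu]].append(cpu)
    cores = sorted(by_core.values())  # each inner list holds one core's sibling CPUs
    order = []
    # Take the first context of every core, then the second context of every core, ...
    for level in range(max(len(c) for c in cores)):
        for cpus in cores:
            if level < len(cpus):
                order.append(cpus[level])
    return order


def linux_core_map():
    """Map each CPU this process may use to its (package, core) pair (Linux only)."""
    core_of = {}
    for cpu in os.sched_getaffinity(0):
        base = f"/sys/devices/system/cpu/cpu{cpu}/topology/"
        with open(base + "physical_package_id") as f:
            pkg = int(f.read())
        with open(base + "core_id") as f:
            core = int(f.read())
        core_of[cpu] = (pkg, core)
    return core_of


def pin_instance(pid, index, order):
    """Pin the index-th benchmark instance to its CPU in the placement order (Linux only)."""
    os.sched_setaffinity(pid, {order[index % len(order)]})
```

On a 4-core system that numbers sibling contexts 0-3 and 4-7, the order is 0, 1, 2, 3, 4, 5, 6, 7; if siblings are numbered adjacently (0/1, 2/3, ...), it becomes 0, 2, 4, 6, 1, 3, 5, 7. Reading the topology instead of assuming a numbering avoids depending on either convention.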

CFS and manual affinity perform the same. On the 4-core, 8-thread system, Dhrystone throughput increases linearly from 1 to 4 instances. Beyond 4 instances, throughput drops slightly up to 8 instances, because Dhrystone performs slightly worse with SMT than without. Most other applications would instead see a shallow upward slope, typically gaining an extra 15-25% throughput between 4 and 8 instances.
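The shape of these curves follows from a simple model, sketched below in Python. The assumptions are mine rather than the author's: ideal placement on C cores with 2-way SMT, each thread alone on a core contributing one unit of throughput, and a doubled-up core contributing a combined factor `s` (about 0.96 for Dhrystone, per the 4% drop noted earlier, or roughly 1.15 to 1.25 for the "most other applications" case).

```python
def ideal_throughput(k, cores, s):
    """Aggregate throughput of k threads under ideal placement on a 2-way SMT CPU.

    Units are "one thread alone on one core". s is the combined throughput of a
    core running two threads (s < 1 means SMT hurts, s > 1 means it helps).
    """
    if k <= cores:
        return float(k)  # every thread has a core to itself
    doubled = min(k, 2 * cores) - cores  # cores running two threads
    single = cores - doubled             # cores still running one thread
    return single + doubled * s
```

With `s = 0.96` and 4 cores, this gives 4.0 at 4 instances, 3.92 at 6, and 3.84 at 8: the slight decline described above. With `s = 1.2`, it rises to 4.8 at 8 instances instead.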

BFS bounces tasks among thread contexts and often gets the placement wrong, leading to lower performance between 2 and 6 instances. Not good.

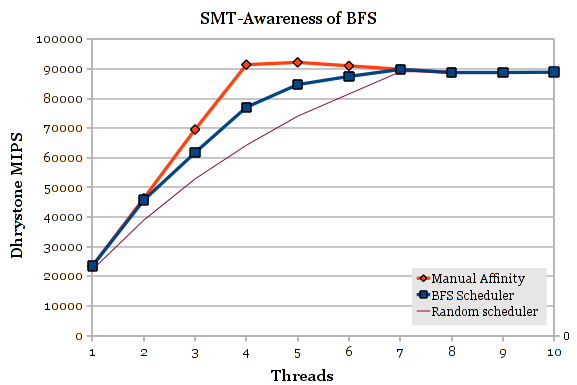

Is BFS SMT-Aware?

If BFS isn’t very good at scheduling for multicore SMT systems, we should ask whether BFS is SMT-aware at all. I compare BFS to a hypothetical random scheduler that assigns threads to thread contexts at random. Its curve is computed using straightforward probability, assuming a thread runs at full speed when it has a core to itself and gains no additional throughput from SMT (slightly better than Dhrystone’s -4%), and is then scaled to match the graph. The Manual Affinity results are also plotted for comparison.
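The random-scheduler curve can be approximated with the Python sketch below. The assumptions are mine and may differ from how the original plot was computed: each of k threads lands on a distinct thread context chosen uniformly at random, and a core delivers one unit of throughput if at least one of its contexts is busy. By linearity of expectation, expected throughput is C(1 - P(idle)), where P(idle) is the chance that all k chosen contexts avoid a given core's two contexts, i.e. comb(2C-2, k) / comb(2C, k).

```python
from math import comb


def random_scheduler_throughput(k, cores, smt_ways=2):
    """Expected throughput when k threads occupy k distinct, uniformly random contexts.

    A core counts as one unit of throughput if any of its contexts is busy
    (no gain or loss from SMT), matching the "no additional throughput" assumption.
    """
    contexts = cores * smt_ways
    if k >= contexts:
        return float(cores)
    # Probability that none of the k chosen contexts belongs to a given core.
    p_idle = comb(contexts - smt_ways, k) / comb(contexts, k)
    return cores * (1 - p_idle)
```

On the 4-core i7 860, this gives about 1.86 at 2 threads and about 3.14 at 4 threads, versus 2 and 4 for ideal placement, before any scaling to the graph.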

The graph shows that BFS is indeed SMT-aware: its performance falls roughly halfway between ideal scheduling and a random scheduler. It just isn’t very good at using every core before allowing threads to double up on one core.

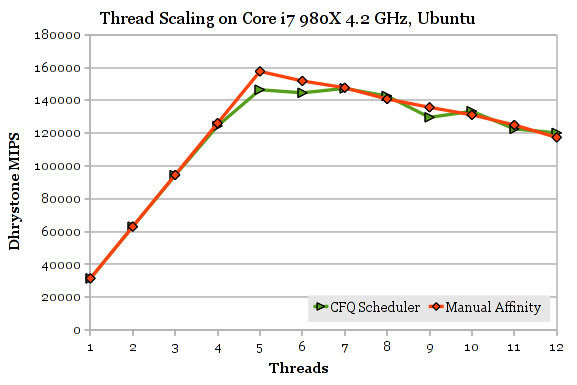

Ubuntu 10.10 and Core i7 980X

Out of curiosity, I ran the same test on a 6-core, 12-thread Core i7 980X overclocked to 4.22 GHz.

The Manual Affinity and CFS plots are normal between 1 and 4 Dhrystone instances (linear increase), but performance is abnormally low at 5 or more instances. The reason: the processor exceeds 100°C and is being thermally throttled.