While crafting some C code to stress integer ALU bandwidth, I decided to compile it with several compilers to see how their output would differ. The code is a hand-unrolled loop with 5 independent chains of dependent ALU operations (add, and), designed to give the integer core many independent ALU instructions to execute.

I tested the same C code through the following compilers, both 32- and 64-bit, on the same Core i7-3770K system:

- OS X Apple clang version 3.1 (tags/Apple/clang-318.0.61) (based on LLVM 3.1svn)

- OS X gcc version 4.2.1 (Based on Apple Inc. build 5658) (LLVM build 2336.9.00)

- Linux gcc version 4.7.1 (GCC)

- Linux Intel C Compiler Version 12.0.3

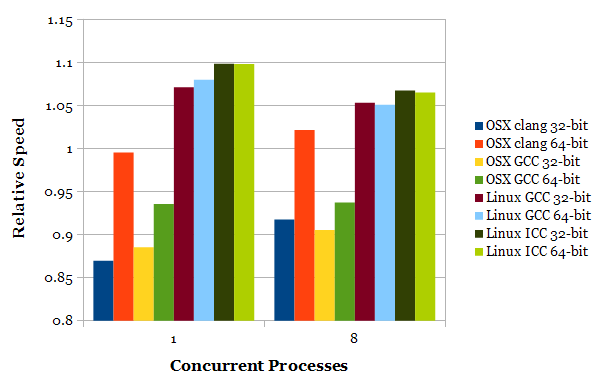

The execution speed is normalized to the geometric mean of all the results.

The two sets of bars show the single-threaded performance as well as the performance when running eight copies of the routine (i.e., with Hyper-Threading). The performance gap between compilers narrows when Hyper-Threading is used, because a second thread can absorb the effects of poor instruction scheduling.

Even for a simple loop, there is a surprising difference in the execution speed of the compiled code. Because the loop is so simple and repetitive, a human can figure out roughly what the optimal output is and compare that to what the compilers generate.

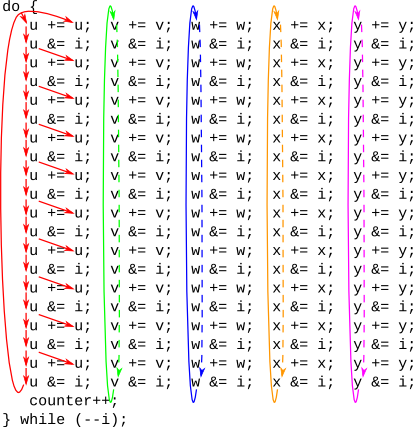

C Source Code

In the above code, i is the loop counter and is also used as an integer operand that the computations read but never modify. There are 5 independent chains of ALU operations, alternating between addition and logical AND. counter is a volatile integer variable that is incremented once per iteration. This routine should provide enough data-independent instructions to execute 5 instructions per clock, which is more than sufficient to saturate today’s processors. The highest throughput I measured is 2.94 ALU operations per clock, with the loop and counting overhead included in the timing. Counting the 2 (fused) overhead instructions that accompany every iteration of 100 ALU operations, that works out to 3.00 instructions per clock.
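Since the source is only shown as an image, here is a minimal sketch of what a loop of this shape could look like. It is a reconstruction from the description above, not the original code: the function name alu_chains, the ROUND macro, the initial chain values, and the use of a pointer for the counter are all assumptions made for illustration. The original was unrolled by hand; the macro here just keeps the sketch short.

```c
/* Hypothetical reconstruction of the benchmark loop described in the text.
 * Names (alu_chains, ROUND) and initial values are assumptions. */

/* One round: 5 independent ADDs, then 5 independent ANDs (10 ALU ops).
 * Each of a..e forms its own dependency chain; i is only read. */
#define ROUND(i)                                          \
    do {                                                  \
        a += (i); b += (i); c += (i); d += (i); e += (i); \
        a &= (i); b &= (i); c &= (i); d &= (i); e &= (i); \
    } while (0)

unsigned int alu_chains(unsigned int iterations, volatile unsigned int *counter)
{
    unsigned int a = 1, b = 2, c = 3, d = 4, e = 5;

    /* Counting down lets the compiler end the loop with a dec/jne pair. */
    for (unsigned int i = iterations; i != 0; i--) {
        /* 10 rounds x 10 operations = 100 ALU operations per iteration */
        ROUND(i); ROUND(i); ROUND(i); ROUND(i); ROUND(i);
        ROUND(i); ROUND(i); ROUND(i); ROUND(i); ROUND(i);

        (*counter)++;   /* volatile increment, once per iteration */
    }

    /* Combine the chains so none of them can be discarded as dead code. */
    return a ^ b ^ c ^ d ^ e;
}
```

A caller would pass the iteration count and the address of a volatile unsigned int, then time the call. Whether a given compiler keeps all 100 operations intact depends on its optimizer, so the disassembly should be checked before trusting any numbers from a sketch like this.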

The optimal assembly code after compilation should roughly follow the structure of the C code. It would alternate between 5 (independent) ADD operations and 5 AND, with a few loop-counter instructions inserted somewhere within the loop. The general principle is that data-dependent operations should be spaced out to make it easier for the processor to find instruction-level parallelism.

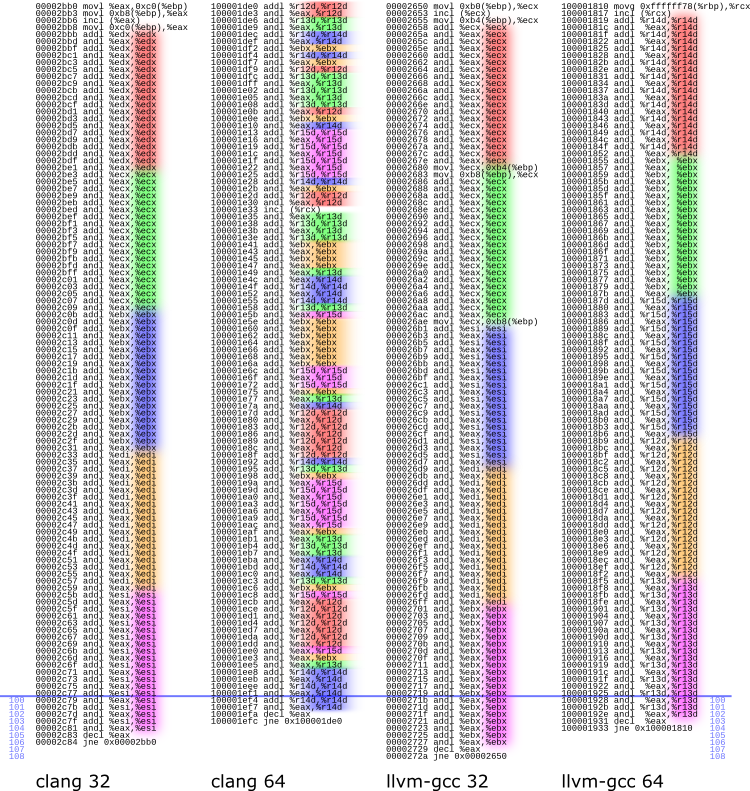

Mac OS X Compilers

Here is the disassembly of the loop compiled on the Mac OS X compilers. The disassembly is in AT&T syntax, so the destination operand is the one on the right. The instructions operating on each “chain” are colour-coded the same as in the C source code.

It appears that for both Clang and GCC, the Apple compilers use LLVM for optimization and code generation, with Clang and GCC only being front-ends. This might explain the similarity in instruction scheduling strategies between the two compilers: There is a tendency to group dependent chains of instructions together, making it hard for the processor to extract ILP. Only the 64-bit LLVM-Clang has some amount of interleaving of instructions, which leads to a significant performance improvement compared to the other three compilers.

Given the poor instruction scheduling of 32-bit LLVM-Clang and both 32- and 64-bit LLVM-GCC, it’s no surprise these three perform worst. So what distinguishes these three from each other?

In 32-bit LLVM-Clang, the first 6 instructions in the loop have many long-latency data dependencies. The value of eax is modified at the bottom of the loop (loop-carried dependency at instruction #105), then stored to memory (instruction #1), loaded again (#4), and is immediately consumed (#6). There wasn’t even an attempt to space out the long-latency store-load-use operations, and this hurts performance.

32-bit LLVM-GCC generates nearly the same code with the same problems as 32-bit LLVM-Clang, but backing up the register value (instruction #1 in the LLVM-Clang code) is done in the middle of the loop (instruction #24).

64-bit LLVM-GCC’s output is significantly better. There are more registers in x86-64, so register spilling is no longer necessary, and the ALU operations no longer depend on the long-latency memory operations. It still suffers from poor instruction scheduling.

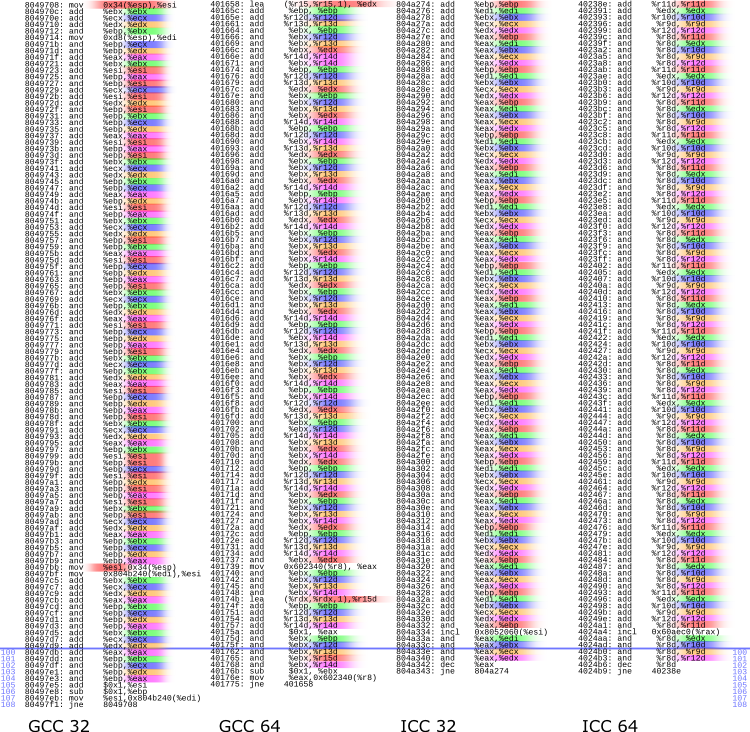

Linux Compilers

The Linux compilers perform quite well, and the disassembly clearly shows that instruction scheduling is much improved compared to the LLVM-generated code.

32-bit GCC (real GCC this time, not an LLVM backend) shows some irregularity in the instruction interleaving. It spills one register to memory, and does that fairly intelligently. The register is spilled at instructions #87-88 and not consumed until instruction #105. Likewise, the same register is spilled again at instruction #1 but not consumed until instruction #11. Although a register has to be spilled to memory, the performance impact is fairly small.

64-bit code allows GCC to have enough registers to avoid spilling to memory…maybe too many. In instructions #1 and #92, it needlessly switches between using edx and r15d to hold the same variable. Like 32-bit GCC, 64-bit GCC also unnecessarily splits up the counter increment into three instructions (load #87, add #97, store #104). Because it does not need to spill registers to memory, GCC’s instruction scheduling spaces out dependent instructions well, although seemingly with some randomness.

The 32-bit and 64-bit Intel compilers generate nearly identical code, differing only in register assignment and, therefore, code size. Instructions are perfectly interleaved, with maximal spacing between dependent instructions. Incrementing the volatile counter uses a pointer stored in a register (instruction #97) with the destination operand in memory, so no register is needed to temporarily hold the counter value. This saves one register, making 32-bit ICC the only 32-bit compiler that does not spill any registers to memory for this routine. The use of dec-jne (instructions #102-103) to terminate the loop also allows macro-op fusion to work.

Register Spilling

LLVM-Clang and LLVM-GCC seem to interpret the volatile qualifier differently from the GCC and Intel C compilers. The counter variable was declared as volatile unsigned int &counter. LLVM-Clang and LLVM-GCC treat this as meaning that both the pointer itself and the value to which it points are volatile. This leads to code that loads the value of the pointer from memory, then increments the integer located in memory using that pointer. GCC and Intel’s compiler interpret the volatile declaration as meaning only the final integer is volatile. They keep the pointer in a register, and simply increment the integer located where the pointer points. This reduces register usage by one. GCC then wastes one register by splitting counter++ into three instructions instead of using a destination operand in memory, so the 32-bit Intel compiler is the only compiler that generates 32-bit code that does not spill any registers to memory.
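The two readings described above can be written out explicitly using pointer syntax. This is only an illustration of the distinction, under the assumption that the counter is passed as a pointer rather than as the reference in the original declaration; the function names are hypothetical.

```c
/* Illustration only: the two interpretations of "volatile" discussed in
 * the text, spelled out with explicit pointers. Names are hypothetical. */

/* Only the pointed-to integer is volatile. The pointer is an ordinary
 * value, so a compiler may keep it in a register for the whole loop. */
void bump_pointee_volatile(volatile unsigned int *counter)
{
    (*counter)++;
}

/* Both the pointer and the pointed-to integer are volatile. The pointer
 * itself must be re-read from memory on every use, which matches the
 * "load the pointer, then increment through it" code described above. */
void bump_both_volatile(volatile unsigned int *volatile counter)
{
    (*counter)++;
}
```

Both functions increment the same integer; the difference is only in how many memory accesses the compiler is obliged to emit and, as a consequence, how many registers stay free for the ALU chains.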

Instruction Scheduling

It seems like this version of LLVM gets it wrong (it tends to group dependent operations together), while GCC and ICC do it right.
