See also: Ivy Bridge Benchmarks

Simultaneous multithreading (SMT, or Intel Hyper-Threading) is a method of improving the utilization and throughput of a processor by allowing two independent program threads to share the execution resources of one processor, so when one thread stalls the processor can execute ready instructions from a second thread instead of sitting idle. Because only the thread context state and a few other resources are replicated (unlike replicating entire processor cores), the throughput improvement depends on whether the shared execution resources are a bottleneck and is typically much less than 2x with two threads.

Currently, Intel uses HT as a feature for market segmentation: The desktop Core i5 processors differ from the Core i7 mainly by whether HT has been disabled, and Intel charges a significant price premium for the Core i7. Therefore, I want to know what the performance benefit of HT is. Since my workloads usually involve running many independent single-threaded processes on a cluster of machines, these measurements use multiple processes, not single-process multithreaded workloads.

Hardware

| Component | Specification |
|---|---|
| Processor | Intel Core i7-860 (4 cores, 8 threads, 3.2 GHz, Turbo disabled, 8 MB L3 cache, Lynnfield) |
| Memory | 8 GB DDR3 1600 @ 1530 |

Workloads

| Workload | Description |
|---|---|
| Dhrystone | Version 2.1. A synthetic integer benchmark. Compiled with Intel C Compiler 11.1. |
| CoreMark | Version 1.0. Another integer CPU core benchmark, intended as a replacement for Dhrystone. Compiled with Intel C Compiler 12.0.3. |
| Kernel Compile | Compile kernel-tmb-2.6.34.8 using GCC 4.4.3. |
| VPR | Academic FPGA packing, placement, and routing tool from the University of Toronto. Modified version 5.0. Intel C Compiler 11.1. |
| Quartus | Commercial FPGA design software for Altera FPGAs. Compile a 6,000-LUT circuit for the Stratix III FPGA. Includes logic synthesis and optimization (quartus_map), packing, placement, and routing (quartus_fit), and timing analysis (quartus_sta). Version 10.0, 64-bit. |
| Bochs | Instruction set (functional) simulator of an x86 PC system. This benchmark runs the first ~4 billion timesteps of a simulation of a system booting Windows XP. Modified version 2.4.6. GCC 4.4.3. |
| SimpleScalar | Processor microarchitecture simulator. This test runs sim-outorder (a cycle-accurate simulation of a dynamically-scheduled RISC processor), simulating 100M instructions. Version 3.0. Compiled with GCC 4.4.3. |
| GPGPU-Sim | Cycle-level simulator of contemporary GPU microarchitectures running CUDA and OpenCL workloads. Version 3.0.9924. |

Throughput Scaling with Multiple Threads

With the exception of the kernel compile workload, all of these tests start multiple instances of the same task and measure the total throughput of the processor (number of tasks divided by the average runtime per task). Kernel compile uses “make -j” to run multiple instances of GCC that independently compile each file, and the time to compile the entire kernel is measured.
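As a minimal sketch of that throughput metric (my own illustration, not the script used for these measurements), the calculation looks roughly like this in Python. The function names and the timings are hypothetical placeholders.

```python
from statistics import mean


def throughput(runtimes_s):
    """Tasks per second for one batch: number of tasks / average task runtime."""
    if not runtimes_s:
        raise ValueError("need at least one runtime")
    return len(runtimes_s) / mean(runtimes_s)


def relative_throughput(runtimes_s, single_task_runtime_s):
    """Throughput normalized so that one task running alone scores 1.0."""
    return throughput(runtimes_s) / throughput([single_task_runtime_s])


# Placeholder timings in seconds, NOT measurements from this post.
four_tasks = [10.0, 10.5, 9.5, 10.0]
print(relative_throughput(four_tasks, single_task_runtime_s=9.0))  # about 3.6
```

Normalizing to the single-task case makes workloads with very different runtimes comparable on one plot.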

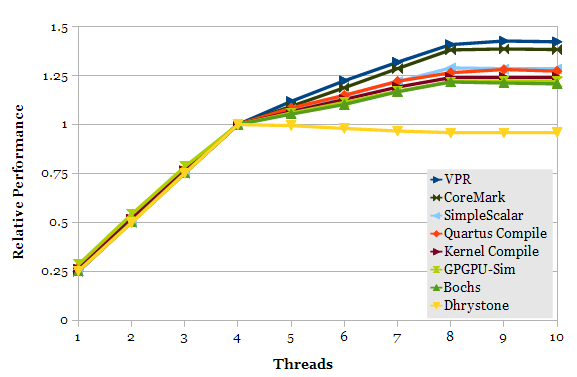

The number of simultaneous tasks is varied and the resulting throughput is plotted. For workloads that are not memory-bound, we expect a roughly linear improvement in throughput between 1 and 4 threads (for a 4-core processor), less improvement between 4 and 8 threads (the additional benefit of HT), and roughly no change in throughput beyond 8 threads (these tasks have little IO).
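That expectation can be written down as a simple piecewise model. This is only a sketch under my own simplifying assumptions: perfectly linear scaling up to the core count, a linear ramp while the second hardware thread of each core fills up, and a flat line afterwards. `ht_gain` is a free parameter, and the default value is just a placeholder.

```python
def expected_relative_throughput(n_tasks, cores=4, ht_gain=0.25):
    """Idealized throughput relative to one task on a CPU with 2-way SMT.

    Assumptions (for illustration only): linear scaling up to `cores` tasks,
    a linear ramp up to cores * (1 + ht_gain) at 2 * cores tasks,
    and no change beyond that.
    """
    if n_tasks < 1:
        raise ValueError("n_tasks must be at least 1")
    if n_tasks <= cores:
        return float(n_tasks)
    if n_tasks <= 2 * cores:
        # Fraction of cores that are running two threads at once.
        doubled = (n_tasks - cores) / cores
        return cores * (1.0 + ht_gain * doubled)
    return cores * (1.0 + ht_gain)


for n in (1, 2, 4, 6, 8, 12):
    print(n, expected_relative_throughput(n))
```

Measured curves that fall below the first segment point at shared cache or memory bandwidth, and the height of the second segment is the HT benefit.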

This line plot shows all of the data in one plot. Workload throughput scales reasonably close to linearly with the number of real cores in use (1 to 4 threads), while the throughput improvement due to HT varies between workloads. Interestingly, Dhrystone throughput decreases with HT, while CoreMark has the second-highest gain (behind VPR), yet both of them are small integer benchmarks that have little main memory traffic.

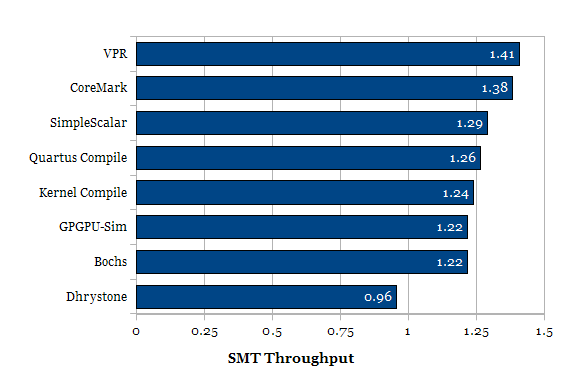

Hyper-Threading Throughput Scaling

This chart focuses on comparing the throughput at 8 threads vs. 4 threads for the different workloads. The median improvement for HT is 25%.
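For reference, the per-workload HT gain and its median can be computed as below. This is a sketch of the calculation only; the workload names and throughput numbers are made-up placeholders, not the data behind the chart.

```python
from statistics import median


def ht_gain(throughput_4, throughput_8):
    """Fractional throughput change from 4 to 8 simultaneous tasks."""
    return throughput_8 / throughput_4 - 1.0


# (throughput at 4 tasks, throughput at 8 tasks), relative to one task.
# Hypothetical values for illustration, NOT this post's measurements.
measurements = {
    "workload_a": (3.9, 4.9),
    "workload_b": (3.8, 3.6),  # a workload that gets slower with HT
    "workload_c": (3.5, 4.6),
}

gains = {name: ht_gain(t4, t8) for name, (t4, t8) in measurements.items()}
for name, gain in sorted(gains.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {gain:+.1%}")
print(f"median: {median(gains.values()):+.1%}")
```

I use the median rather than the mean so that one outlier workload does not dominate the summary.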

Multicore Throughput Scaling

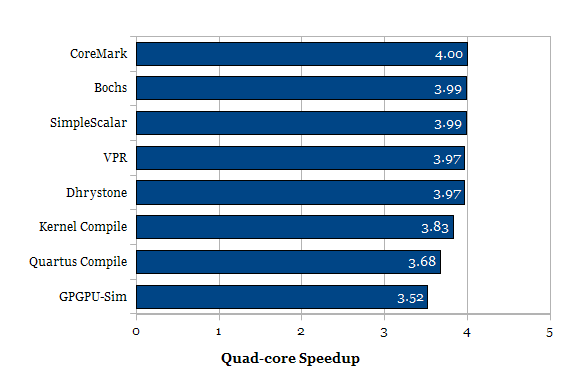

This plot compares the throughput at 4 threads (one thread on each core) vs. 1 thread. If independent processes are executing on independent cores, we would expect to see a 4x improvement in throughput when running 4 copies of the workload. In the Core i7, however, the L3 cache and memory system are shared between cores, so scaling of less than 4x with 4 independent threads indicates that the workload is sensitive to L3 cache size or memory system bandwidth.

Note that the kernel compile workload isn’t strictly independent, so sub-linear scaling does not necessarily mean GCC is sensitive to cache size or memory system bandwidth. The kernel compile workload compiles different files in parallel, with some dependencies between tasks.

Most of the workloads scale close to 4x with 4 cores. Besides kernel compile, the Quartus and GPGPU-Sim workloads also scale significantly worse than linearly. Quartus is known to be sensitive to memory performance. I don’t know about GPGPU-Sim’s characteristics, but this might be a hint that it, too, has fairly random access patterns on a large memory working set.
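The same reasoning can be expressed as a small check that flags workloads whose 4-core scaling falls well short of 4x. This is a sketch: the 0.9 efficiency cutoff is an arbitrary threshold I picked for illustration, and the numbers are placeholders rather than the measured results.

```python
def scaling_efficiency(throughput_1, throughput_n, n_cores=4):
    """Measured speedup divided by the ideal speedup (1.0 = perfectly linear)."""
    return (throughput_n / throughput_1) / n_cores


def shared_resource_suspects(measurements, n_cores=4, min_efficiency=0.9):
    """Names of workloads scaling worse than `min_efficiency` of linear.

    `min_efficiency` is an arbitrary cutoff chosen for this example.
    """
    return sorted(
        name
        for name, (t1, tn) in measurements.items()
        if scaling_efficiency(t1, tn, n_cores) < min_efficiency
    )


# (throughput with 1 task, throughput with 4 tasks); hypothetical values only.
measurements = {
    "workload_a": (1.0, 3.9),
    "workload_b": (1.0, 3.2),
    "workload_c": (1.0, 3.0),
}
print(shared_resource_suspects(measurements))
```

A flagged workload is only a suspect: as with kernel compile, dependencies between tasks can also produce sub-linear scaling.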

Core i5 or Core i7?

The above measurements were made using a Lynnfield Core i7, but future purchasing decisions would be for the next-generation Sandy Bridge. It is unknown how closely the performance gains for HT on Sandy Bridge processors match the HT gains for Lynnfield, although I would expect them to be similar.

As of today, a Sandy Bridge Core i7-2600K costs around $300, while a Core i5-2500K costs $210. The system cost is around 22% higher for the Core i7-2600K, assuming each node has the same amount of RAM (so memory per thread on the HT system is half that of the non-HT system). This indicates that price-performance is slightly better for the Core i7 with HT (22% higher price for 25% more performance) when running cluster-type workloads. However, because the price-performance is so close, there are other issues to consider:

- Hyper-Threading requires twice as many threads to achieve peak CPU utilization, so each system needs twice the amount of RAM to keep memory per thread constant. Cluster-type workloads run independent processes that don’t share memory, so memory consumption is nearly linear with the number of threads. Doubling the RAM for an HT system further adds to the system cost.

- Hyper-Threading creates the potential for load imbalance, where one node has more tasks than physical cores (is using HT) while another node has physical cores idling. This is the same scheduling problem as discussed in my post on SMT-aware process schedulers, but extended to scheduling between different compute nodes. This could be significant for long-running jobs, although with 4C-8T, the likely impact of this should be small (probability theory escapes me for the moment, so I don’t know how much).

- Hyper-Threading should be a net power-performance win. HT does consume significantly more power when used, but I believe it’s less than 20%. Will need measuring.

- Although a net power win, HT CPUs have higher power density and are harder to cool. For temperature-limited overclocks, a non-HT CPU will likely clock slightly higher, even if total system power is higher.

- Hyper-Threading is a performance-density win, because the alternative of having more nodes in a non-HT cluster occupies more space, even if it doesn’t cost more.
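The price-performance arithmetic, including the RAM point from the first bullet, can be sketched in a few lines of Python. The CPU prices, the 22% system cost difference, and the 25% median HT gain are the figures quoted above. The rest-of-system cost is not stated anywhere in this post and is back-calculated from those figures, and `EXTRA_RAM_COST` is a made-up placeholder, so treat the output as an illustration rather than a result.

```python
def perf_per_dollar_ratio(perf_gain, base_cost, upgraded_cost):
    """Performance per dollar of the upgraded system relative to the base one.

    Above 1.0 the upgrade is the better deal; below 1.0 it is the worse one.
    """
    return (1.0 + perf_gain) / (upgraded_cost / base_cost)


I5_CPU = 210.0   # Core i5-2500K price quoted above
I7_CPU = 300.0   # Core i7-2600K price quoted above
HT_GAIN = 0.25   # median HT throughput gain measured above

# Implied by "system cost is around 22% higher"; an inference, not a quoted price.
REST_OF_SYSTEM = (I7_CPU - I5_CPU) / 0.22 - I5_CPU
EXTRA_RAM_COST = 50.0  # hypothetical cost of doubling the RAM on the HT node

base = I5_CPU + REST_OF_SYSTEM
same_ram = perf_per_dollar_ratio(HT_GAIN, base, I7_CPU + REST_OF_SYSTEM)
double_ram = perf_per_dollar_ratio(
    HT_GAIN, base, I7_CPU + REST_OF_SYSTEM + EXTRA_RAM_COST
)
# Largest extra cost (beyond the CPU price difference) at which HT still breaks even.
break_even_extra = HT_GAIN * base - (I7_CPU - I5_CPU)

print(f"same RAM:     {same_ram:.3f}")
print(f"doubled RAM:  {double_ram:.3f}")
print(f"break-even extra spend: ${break_even_extra:.2f}")
```

Under these assumptions the HT system is ahead by only a couple of percent with equal RAM, and it takes very little extra spending on RAM to erase that advantage.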

Conclusion: I still don’t know…