After having a 25/7 Mbps DSL line installed (really only 25/3.6 Mbps), I started noticing performance issues that resembled improper MTU settings and that occurred only when multilink PPP (MLPPP) was in use. It turns out there was “packet loss” associated with fragmenting packets.

Speedtest.net Numbers

| ISP | Downstream (Mbps) | Upstream (Mbps) |
|---|---|---|
| Bell PPP | 24.7 +/- 1.7 | 3.61 +/- 0.02 |
| Teksavvy PPP | 24.3 +/- 1.2 | 3.62 +/- 0.005 |
| Teksavvy MLPPP | 19.9 +/- 3.2 | 3.55 +/- 0.12 |

The speedtest.net numbers were measured on the same DSL line (with different logins) against the Nexicom server, which is even closer through Teksavvy’s routing than through Bell’s. Each entry is the mean and standard deviation of many tests run over two days. The tests used an MTU of 1485, which works around all of the fragmentation issues. Multilink performs noticeably worse than the others.

Packet Loss and Fragmentation

Teksavvy negotiates the following options over LCP:

- MRU 1492

- MRRU 32719

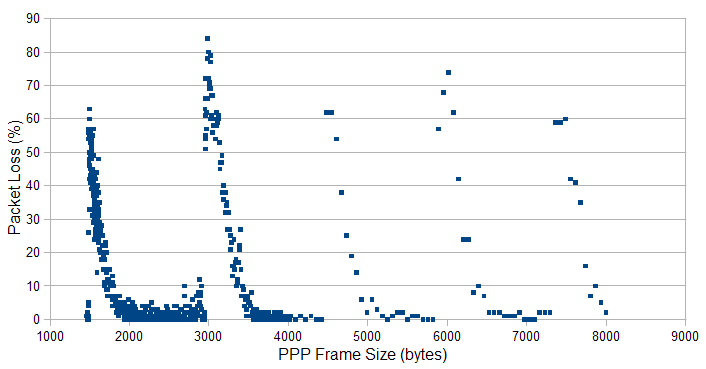

This is a plot of packet loss rate vs. PPP frame size. With a large MRRU, the upstream direction consists of a single large ICMP echo request packet encapsulated into a single large PPP frame, then fragmented at the PPP layer into multiple MLPPP frames. In the downstream direction, Teksavvy does not fragment PPP frames, but fragments the IP packet with each fragment in its own PPP frame. MLPPP frames not greater than 1485 bytes are not fragmented. With MRRU > MRU, we can experiment with both PPP and IP fragmentation.

The periodic spikes in packet loss are abnormal. The spikes correspond to packet sizes at which the ICMP response packet is slightly larger than a multiple of the IP MTU, so there is a small fragment left over after fragmentation. It is the presence of these small fragments that causes packet loss.
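To see where the small leftover fragments come from, here is a minimal sketch of IPv4 fragmentation arithmetic. It assumes a 20-byte IPv4 header with no options and the usual rule that every fragment except the last carries a multiple of 8 data bytes. The function name and the sample packet lengths are made up for illustration; they are not taken from the measurements.

```python
IP_HEADER = 20  # bytes, assuming an IPv4 header without options


def ip_fragment_sizes(packet_len, mtu):
    """Return the total length (header + data) of each IPv4 fragment."""
    if packet_len <= mtu:
        return [packet_len]
    # Every fragment except the last must carry a multiple of 8 data bytes.
    per_fragment = (mtu - IP_HEADER) // 8 * 8
    remaining = packet_len - IP_HEADER
    sizes = []
    while remaining > 0:
        if remaining + IP_HEADER <= mtu:
            chunk = remaining      # last fragment, any length
        else:
            chunk = per_fragment
        sizes.append(chunk + IP_HEADER)
        remaining -= chunk
    return sizes


# Hypothetical packet lengths around multiples of the fragment payload.
for packet_len in (1486, 1500, 2948, 2956):
    print(packet_len, ip_fragment_sizes(packet_len, mtu=1486))
```

With an IP MTU of 1486, a 2948-byte packet splits into two full-size fragments, while a packet only 8 bytes larger gains a third, 28-byte fragment. That tiny trailing fragment is the kind of frame that lines up with the spikes.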

Reordering PPP frames causes packet loss

The MLPPP session violates RFC 1990 Section 4.1 by delivering PPP frames out of order. When a large packet is followed by a tiny fragment, the PPP frame containing the small fragment often arrives sooner than the PPP frame containing the preceding large fragment. RFC 1990 forbids out-of-order MLPPP frames, so the receiver’s only choice is to discard frames that arrive out of order, which causes the observed “packet loss”.
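The effect of reordering on a single-link bundle can be illustrated with a toy receiver. This is a simplified model I made up for illustration, not the logic of pppd or any kernel: each frame is a `(sequence, begin, end)` tuple, a frame whose sequence number is not higher than one already seen is discarded, and a gap in the sequence abandons any partially reassembled packet.

```python
def receive(frames):
    """Return (packets delivered, fragments discarded) for one link.

    frames: (sequence, begin, end) tuples in arrival order.
    """
    last_seq = -1   # highest sequence number seen so far
    held = 0        # fragments of the packet currently being reassembled
    delivered = 0
    discarded = 0
    for seq, begin, end in frames:
        if seq <= last_seq:
            discarded += 1          # arrived after a later frame: too late
            continue
        if seq != last_seq + 1:
            discarded += held       # gap: the partial packet cannot complete
            held = 0
        last_seq = seq
        if begin:
            discarded += held       # previous packet never saw its end
            held = 1
        elif held > 0:
            held += 1
        else:
            discarded += 1          # middle or end fragment with no beginning
            continue
        if end:
            delivered += 1
            held = 0
    return delivered, discarded


in_order = [(0, True, False), (1, False, True)]    # big fragment, then tiny one
reordered = [(1, False, True), (0, True, False)]   # tiny fragment arrives first
print(receive(in_order))
print(receive(reordered))
```

In this model the in-order case delivers the packet, while swapping the two frames loses both fragments, and with them the whole packet.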

The same symptoms occur in both upstream and downstream directions, regardless of whether the small frames originated from IP packet fragments or PPP frame fragments. The reordering happens at the PPP layer: The IP fragment offsets are in-order relative to the MLPPP sequence number. It appears this PPP frame reordering is done by Teksavvy: Using the same DSL line, modem, and configuration, a Teksavvy login will reorder small packets ahead of a big one, but a Bell DSL login will not.

With plain IP on PPP (no PPP multilink, compression, or encryption), this isn’t a huge issue. PPP doesn’t notice when packets are out of order, and IP doesn’t care. Out-of-order packet delivery breaks everything else, however.

For MLPPP, short of getting the PPP layer to deliver packets in order, I think the next best thing is to avoid creating tiny frames, which reduces the probability of getting a reordered packet. Reducing fragmentation should help, but it probably won’t help if tiny ACK packets are being reordered too. MLPPP requires that the underlying PPP frames are delivered in order, as RFC 1661 requires: mostly-in-order isn’t sufficient.

It looks like this might be an attempt to improve performance by prioritizing small frames (likely to be ACK packets). But the PPP layer would be the wrong place to do this.

Reducing fragmentation via MRU/MRRU options

If the network will deliver tiny MLPPP frames out of order, we can avoid some of the penalty by reducing the number of small frames. We can’t affect how many small IP packets (and empty ACKs) are sent, so the best we can do is avoid IP or PPP fragmentation.

There are two significant sources of fragmentation: downstream IP packet fragmentation, and upstream PPP frame fragmentation. It appears Teksavvy’s router does not do downstream PPP frame fragmentation, and upstream IP fragmentation should be rare if Path MTU discovery works normally.

Upstream

- On single-link bundles, when MRRU > (MRU – headers(6 bytes)), large PPP frames are fragmented to fit in the MRU. Since the MRRU is used as the IP-layer MTU, PPP frame fragmentation can be avoided by lowering the server MRRU to (1492-6 = 1486), keeping MRU at 1492.

Workaround: Set the client IP-layer MTU to 1486 manually to avoid upstream PPP frame fragmentation.

- Additionally, the Linux kernel (2.6.31-rc6 and newer) seems to have a bug where it fragments PPP frames into MLPPP fragments 2 bytes smaller than allowed by the MRU. (drivers/net/ppp_generic.c: ppp_mp_explode()) Lowering the server MRRU (or client IP MTU) to 1484 may be even better.
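The upstream arithmetic can be sketched as follows. The 6-byte multilink overhead and the 2-byte Linux shortfall are the figures quoted above. The function names and the greedy "fill each fragment, then start the next" split are my own assumptions for illustration; a real implementation may divide a frame differently.

```python
MLPPP_HEADER = 6  # bytes of multilink overhead per frame, as quoted above


def largest_unfragmented_mtu(mru, kernel_slack=0):
    """Largest IP MTU that still fits in one MLPPP frame."""
    return mru - MLPPP_HEADER - kernel_slack


def mlppp_fragment_payloads(packet_len, mru, kernel_slack=0):
    """Split a packet greedily into MLPPP fragment payloads (assumed policy)."""
    limit = largest_unfragmented_mtu(mru, kernel_slack)
    sizes = []
    while packet_len > 0:
        chunk = min(limit, packet_len)
        sizes.append(chunk)
        packet_len -= chunk
    return sizes


print(largest_unfragmented_mtu(1492))                   # plain MLPPP
print(largest_unfragmented_mtu(1492, kernel_slack=2))   # Linux 2.6.31-rc6 and newer
print(mlppp_fragment_payloads(1492, mru=1492))          # IP MTU left at 1492
print(mlppp_fragment_payloads(1486, mru=1492))          # IP MTU lowered to 1486
```

Under these assumptions, a 1492-byte packet is split into a 1486-byte fragment plus a 6-byte one, while a 1486-byte packet goes out as a single frame.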

Downstream

- It appears the client’s MRU is used incorrectly by Teksavvy’s router. The MRU value should apply to the PPP payload, but Teksavvy’s routers appear to use it for the IP layer’s MTU without subtracting off the 6-byte multilink headers. Because of this, Teksavvy’s routers will send PPP frames that are up to 6 bytes longer than the client’s MRU. The routers handle the MRRU option correctly. Normally, the client MRRU is used for the IP MTU and the PPP layer will fragment frames to fit within MRU, but Teksavvy does not fragment PPP frames.

Workaround: At least one of the routers (206.248.154.106) seems to have hard-coded a maximum IP-layer MTU of 1486. Otherwise, the client MRRU should be set to 1486 (with MRU=1492).

- Teksavvy’s workaround has a side effect when multilink is disabled. When the MTU is really 1492, the router fragments IP packets at 1486 anyway.

- This IP-layer fragmentation ignores the Don’t Fragment (DF) bit. This breaks Path MTU discovery.

To Teksavvy: I think the DF bit should be respected, but this might make bad configurations fail even harder.

- There is no hard-coded 1486-byte IP-MTU on 206.248.154.103. Workaround: The client should ask for a 1486-byte MRRU because the 1492-byte MRU option is handled incorrectly.
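The DF problem can be made concrete by comparing two forwarding decisions. The first function follows the standard behaviour that Path MTU discovery relies on (RFC 791 and RFC 1191): an oversized packet with DF set is dropped and answered with an ICMP "fragmentation needed" message. The second is only a model of the behaviour described above, not the router's actual code. Both function names are hypothetical.

```python
def route_standard(packet_len, dont_fragment, mtu):
    """Decision a router makes when it honours the DF bit."""
    if packet_len <= mtu:
        return "forward"
    if dont_fragment:
        # ICMP type 3 (destination unreachable), code 4
        # (fragmentation needed and DF set), carrying the next-hop MTU.
        return "drop, send ICMP fragmentation-needed (next-hop MTU %d)" % mtu
    return "fragment"


def route_observed(packet_len, dont_fragment, mtu):
    """Model of the behaviour described above: DF is ignored."""
    if packet_len <= mtu:
        return "forward"
    return "fragment"


# A 1492-byte packet with DF set, against the hard-coded 1486-byte IP MTU.
print(route_standard(1492, True, 1486))
print(route_observed(1492, True, 1486))
```

In the second case the sender never receives the ICMP message, so it never learns to lower its packet size, and every full-size packet keeps producing a small trailing fragment.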

Summary

Client:

- Set IP-MTU to 1486 (or 1484 on Linux 2.6.31+) to avoid upstream PPP frame fragmentation (#1)

- Set MRU=1492. This is the correct value even though Teksavvy doesn’t handle it correctly.

- Set MRRU to 1486 because Teksavvy does not correctly handle an MRU of 1492. (#2)

Teksavvy:

- Setting server MRRU to 1486 (or 1484 for Linux clients) would avoid upstream PPP fragmentation without the client having to set the MTU manually. (#1)

- MRU should be 1492 as it is now.

- Hard-coding IP-MTU to 1486 bytes is a good workaround for MLPPP use, but not so good for non-MLPPP users who want IP-MTU=1492. Either way, please respect the DF flag! (#3)
